This is why AI-assisted keywording only occasionally fits into DAM workflows

Wouldn’t it be nice if, just by uploading images and videos to your media library, an AI-assisted DAM system would automatically categorise and tag them and make them searchable?

So why, despite major advances, is computer vision still not relevant to media bank workflows in 2021?

Amazing technology, already presented five years ago

Back in 2016, during a presentation at the DAM Helsinki 2016 conference, I uploaded some images to a tagging service, and it returned these amazing results on the spot. The audience responded with applause, impressed by how tagging could be demonstrated live.

The service I used back then was called EyeEm Vision. EyeEm is one of the world’s leading photography marketplaces and communities, with more than 20 million users. Using computer vision technology and facial recognition, the software tags photographs and videos as they are imported.

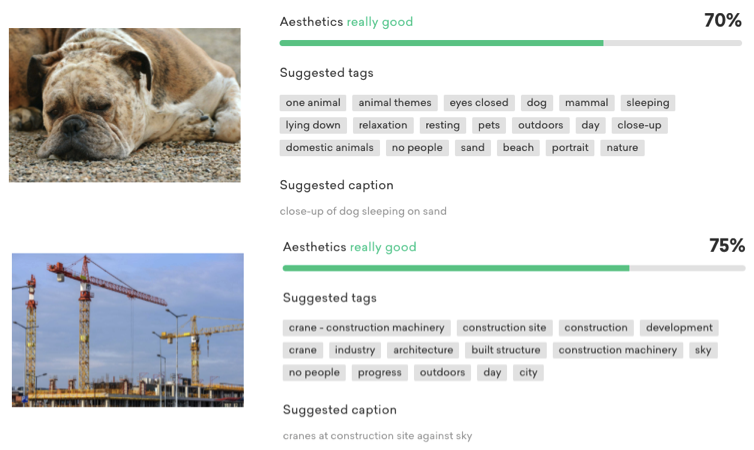

The results for my images were divided not only into keywords but, amazingly, also into aesthetics and descriptions (or captions, if you prefer). EyeEm Vision used a deep-learning computer vision framework that attempts to identify and rank images by aesthetics and concept.

Captions generated for the images, such as “close-up of dog sleeping on sand” and “crane at construction site against sky”, carry more information than the keywords that today’s cloud-based, out-of-the-box image recognition delivers in DAM systems, not to mention the aesthetic value assigned to each image.

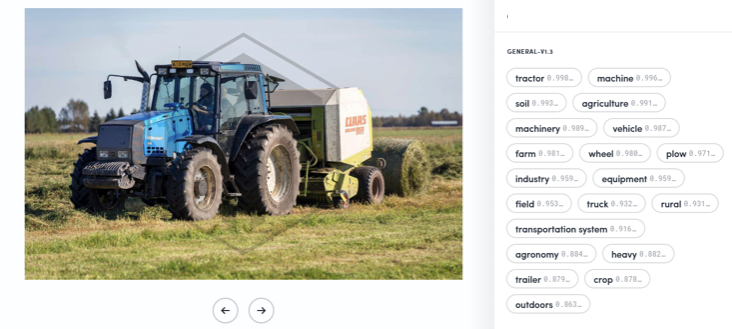

Often, successful keywording in DAM looks like this tagging result from Clarifai, which is typical of what any cloud image recognition provider delivers, whether Google Vision, Amazon Rekognition, Azure Cognitive Services, Imagga, Clarifai or one of several others.